[06]

Envisioning bioarchitecture

Year 2022

Tags architecture, visual art

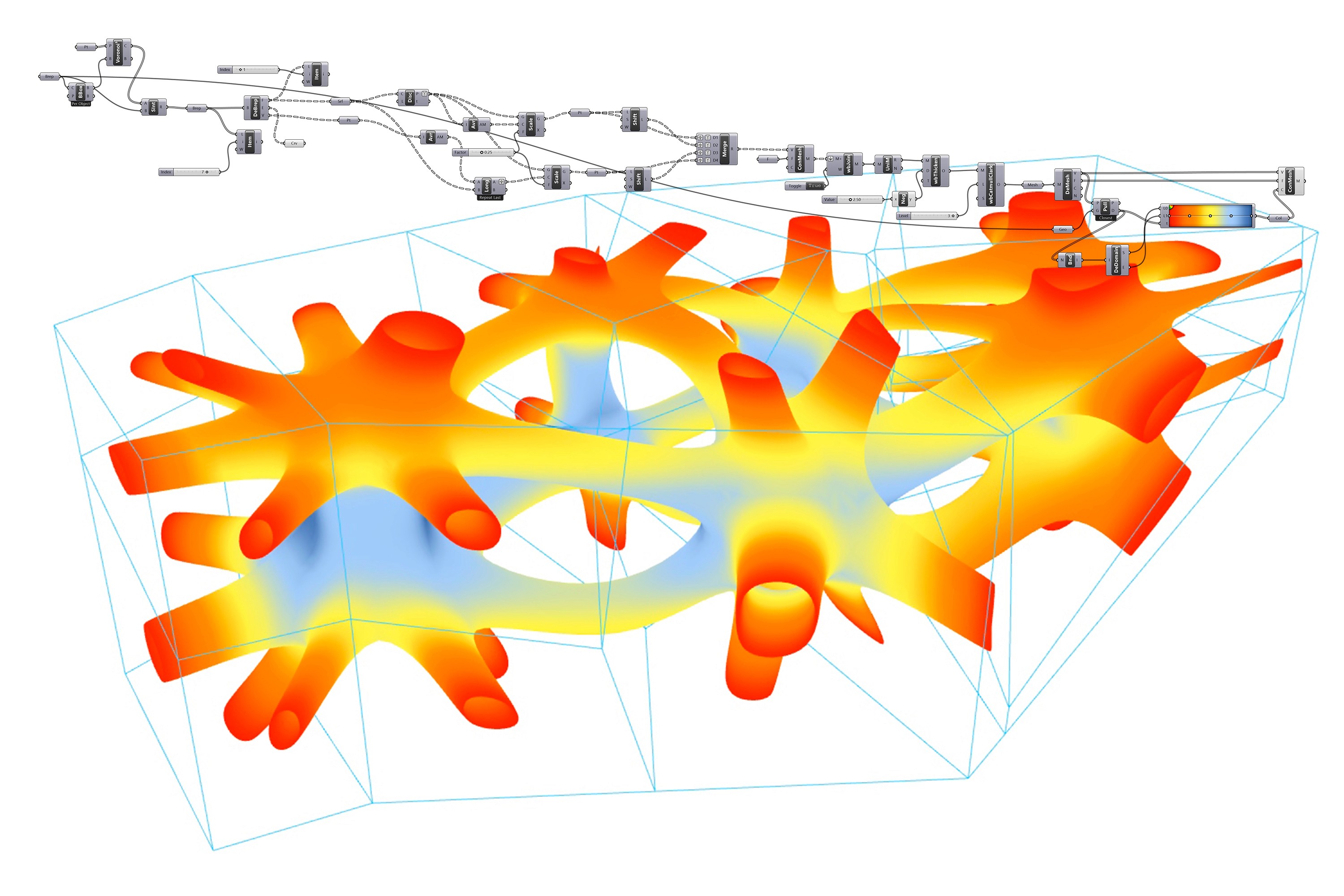

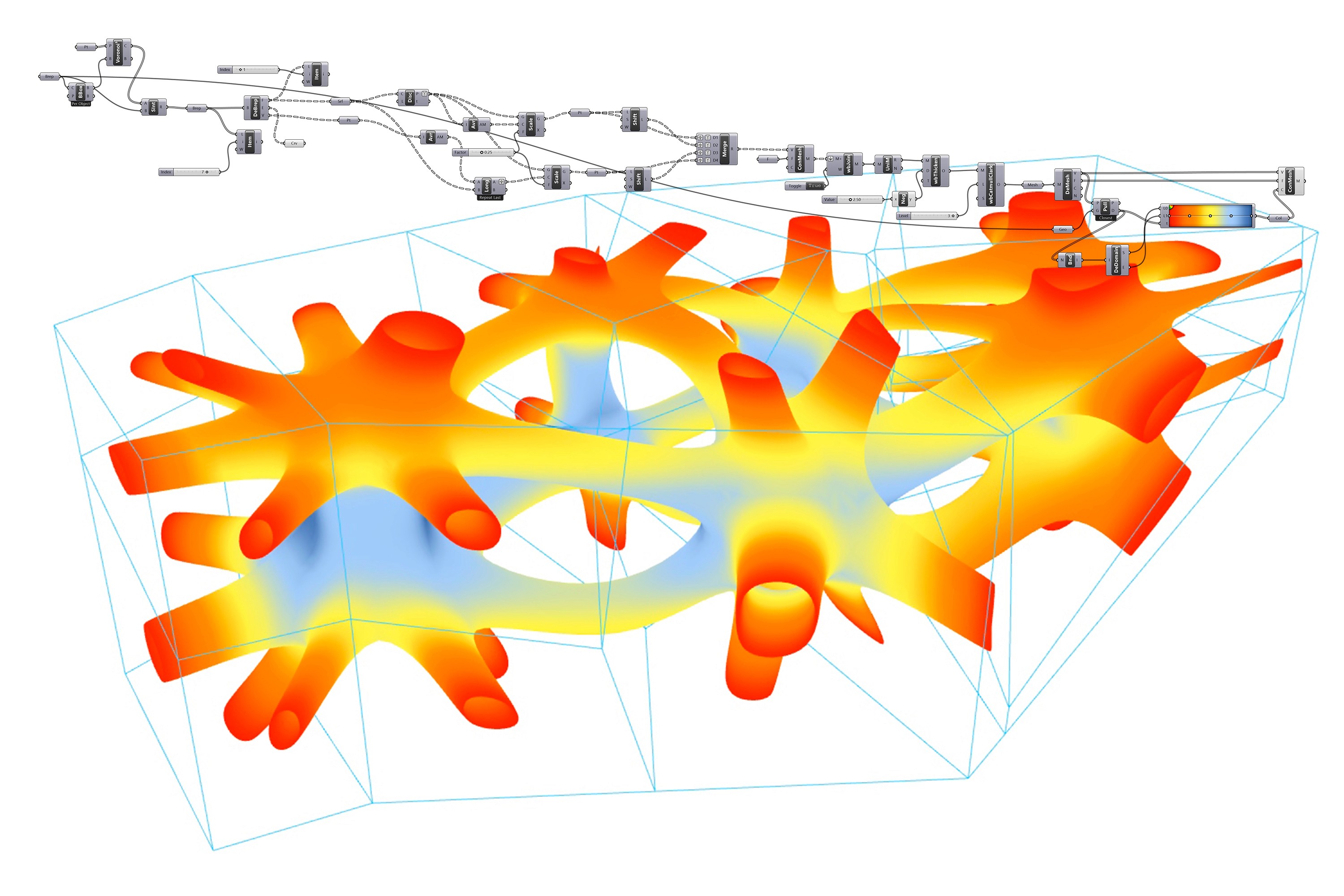

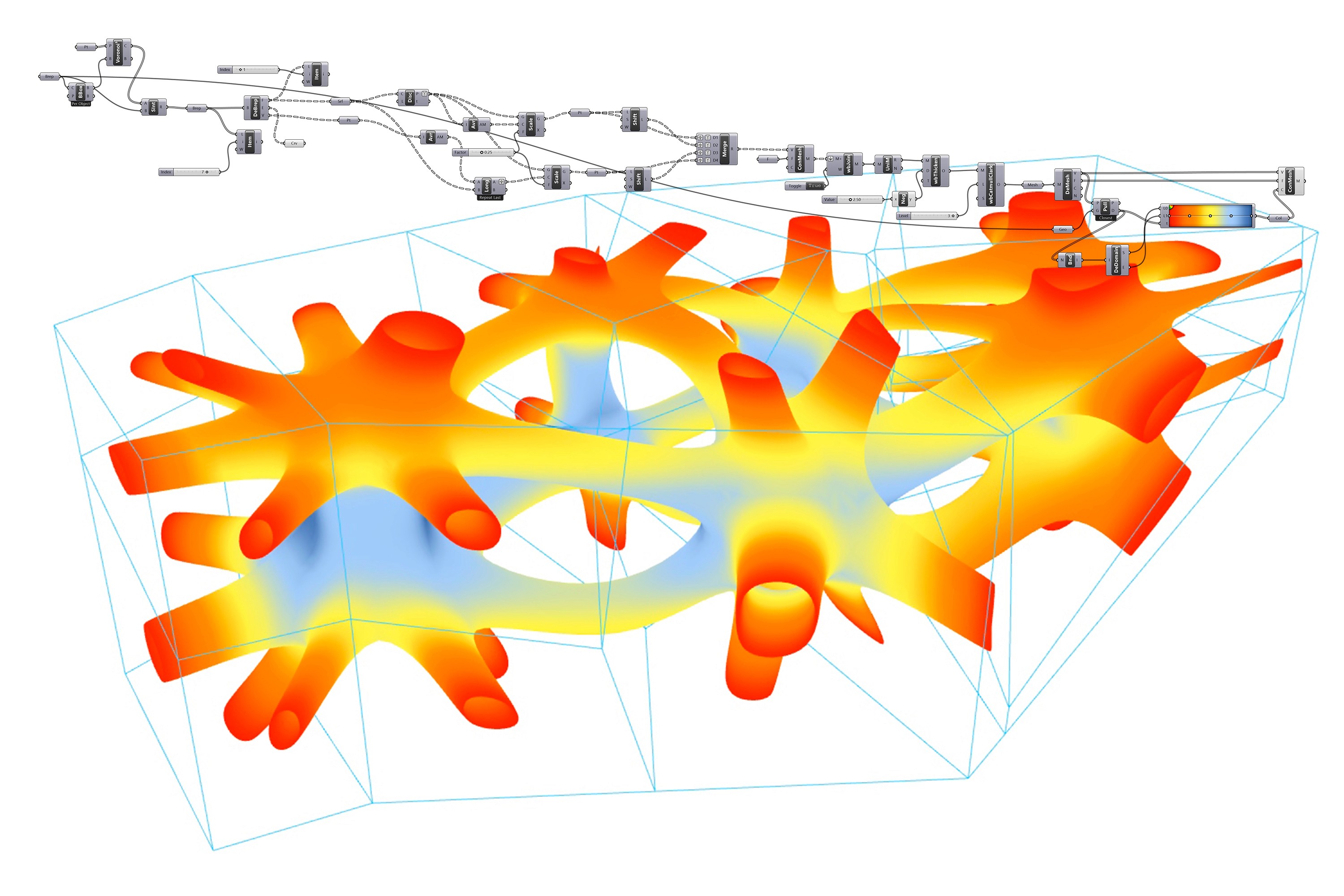

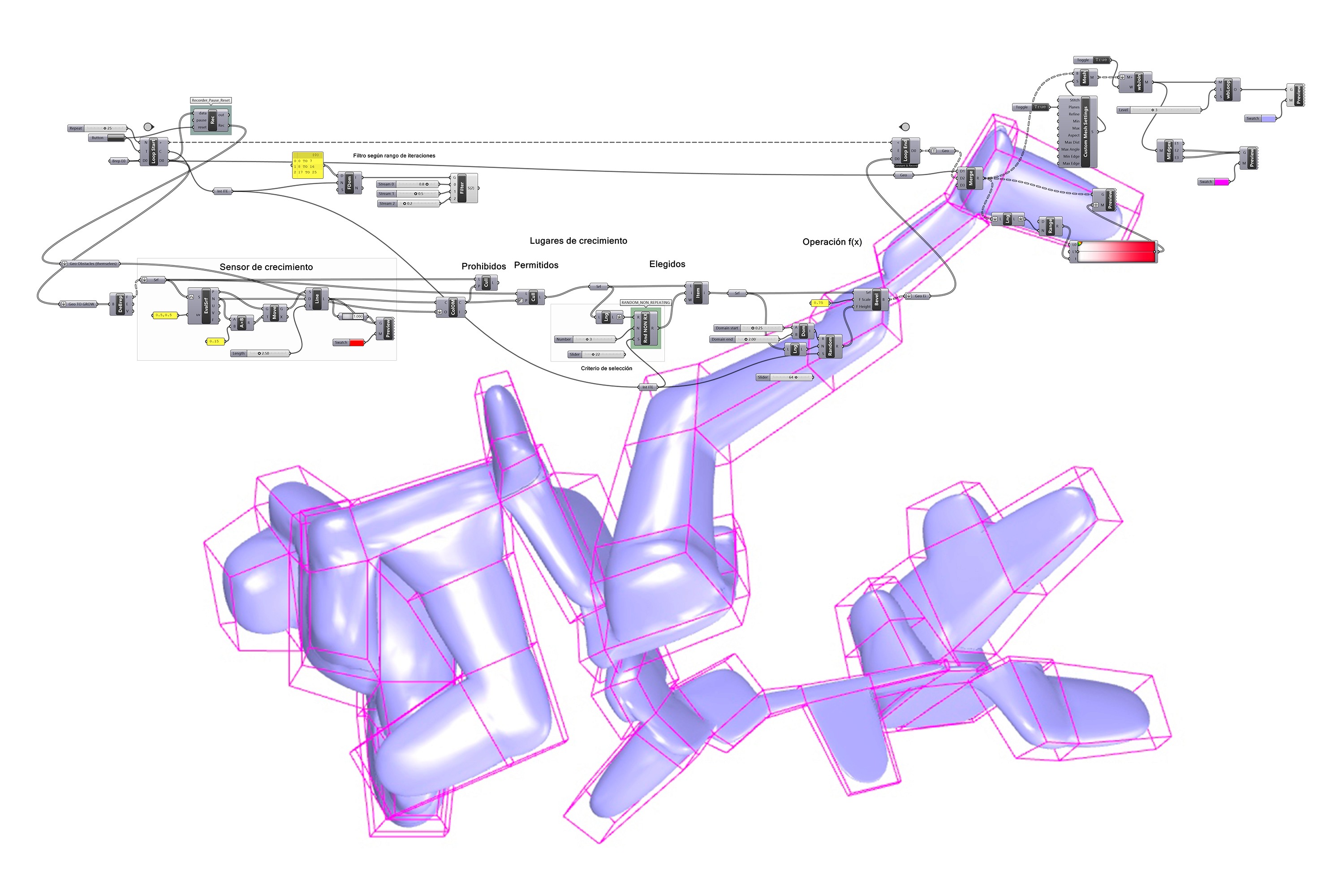

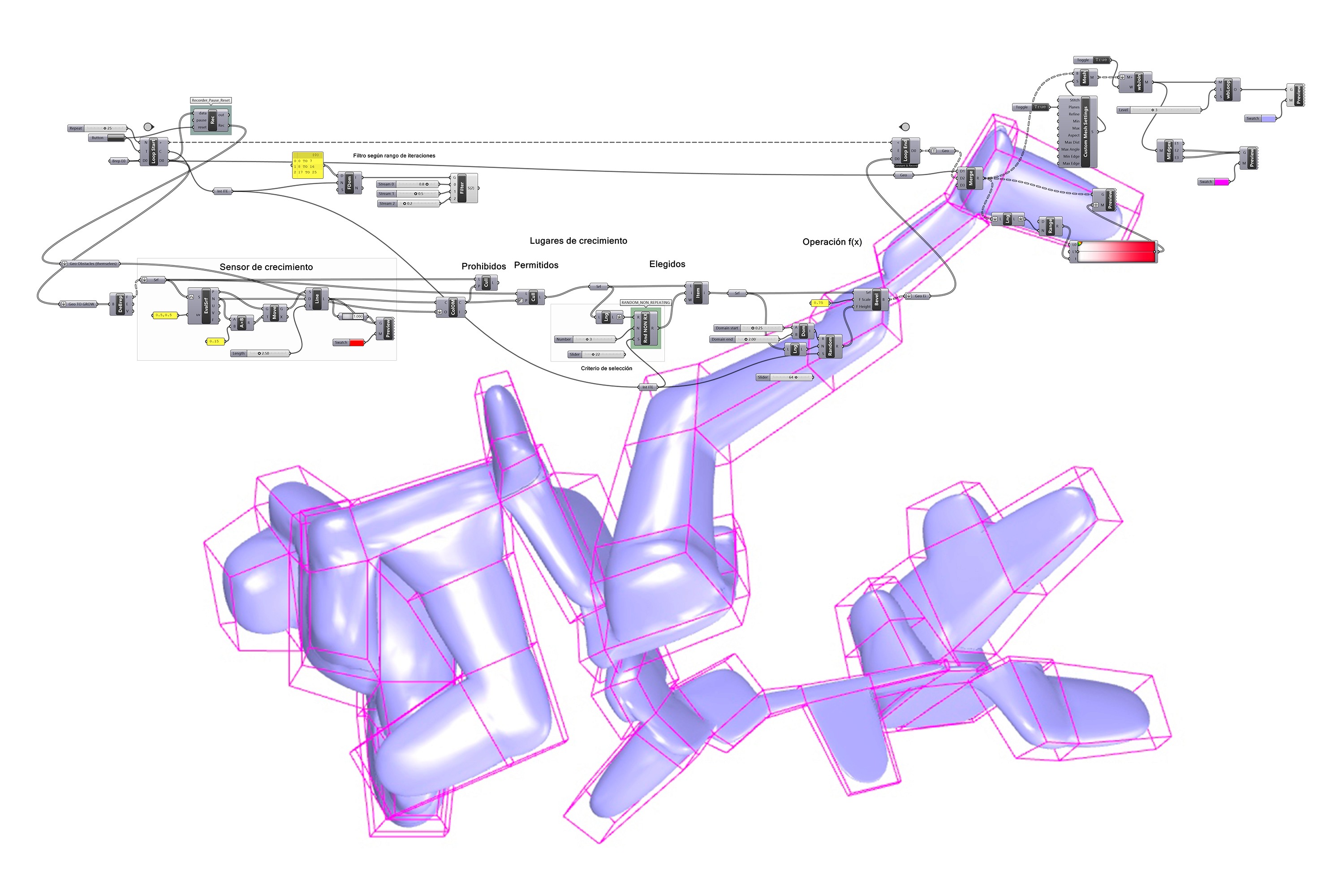

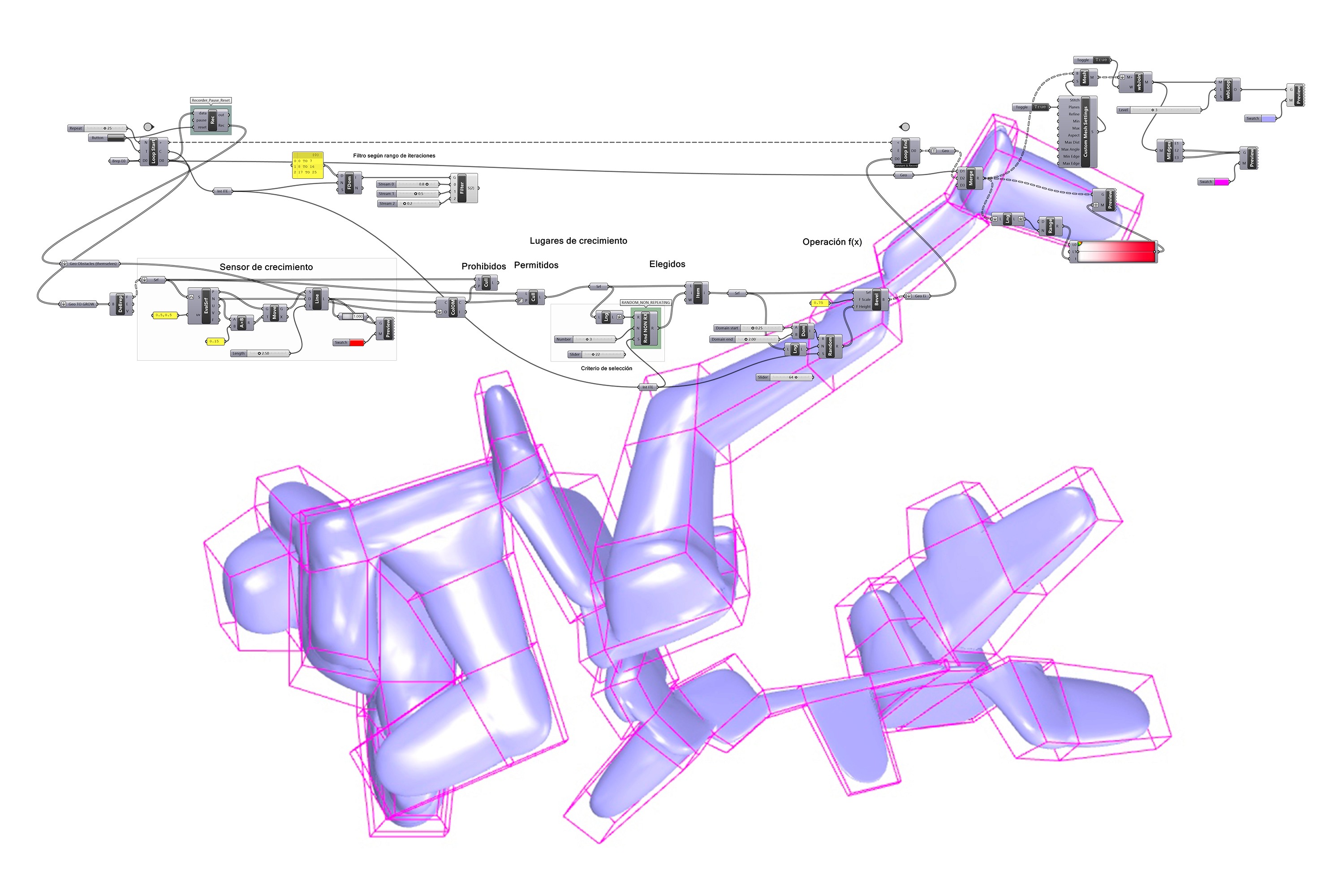

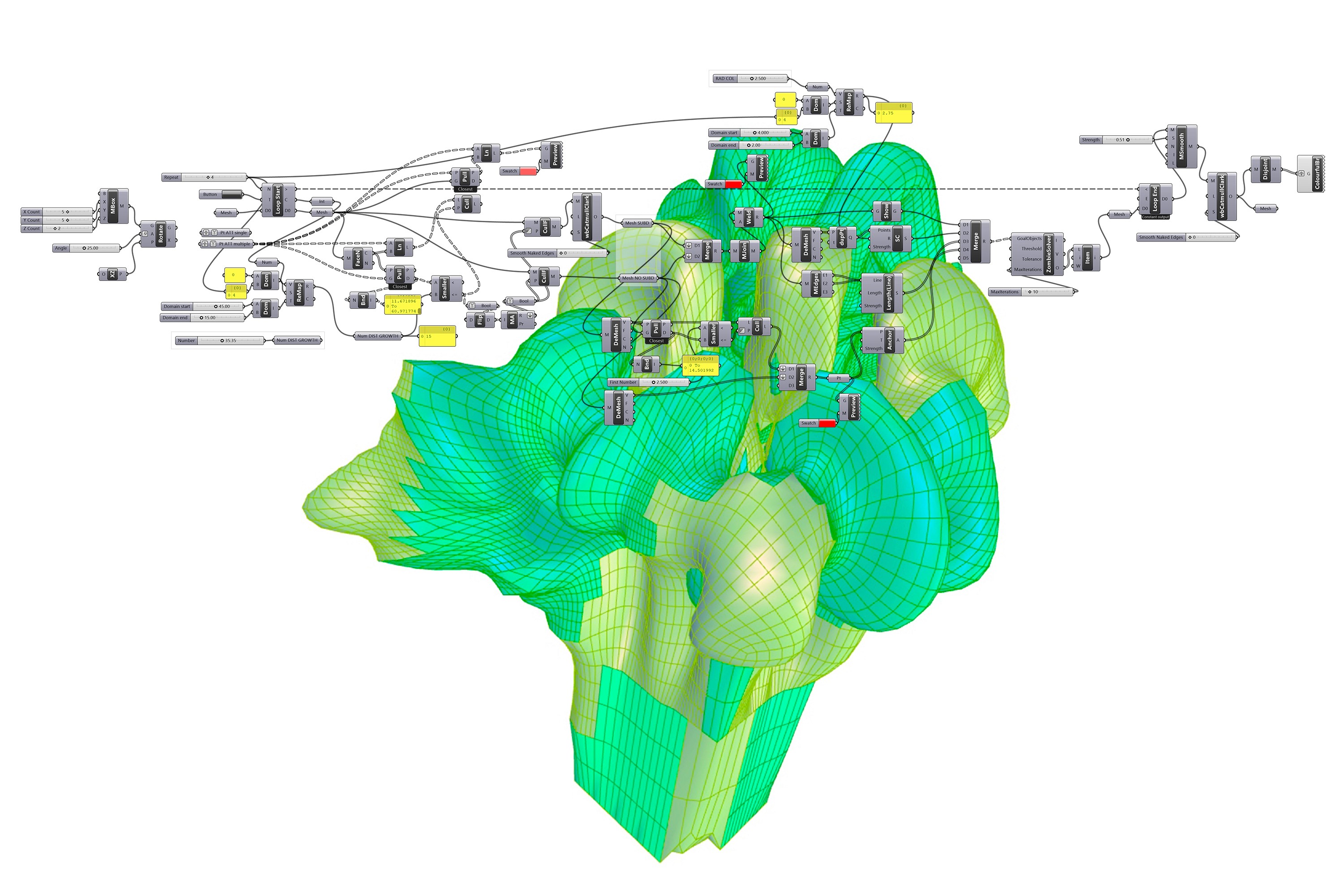

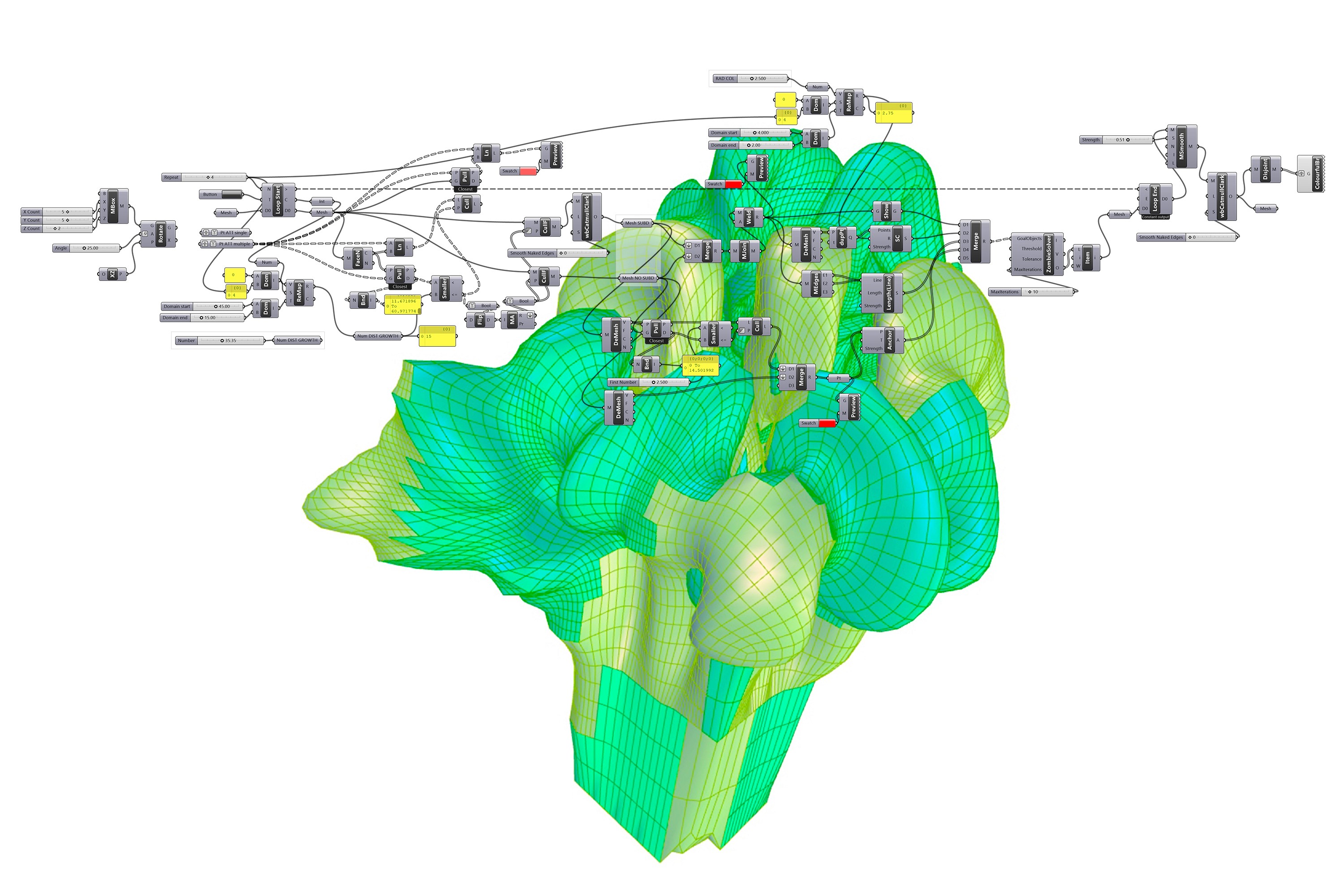

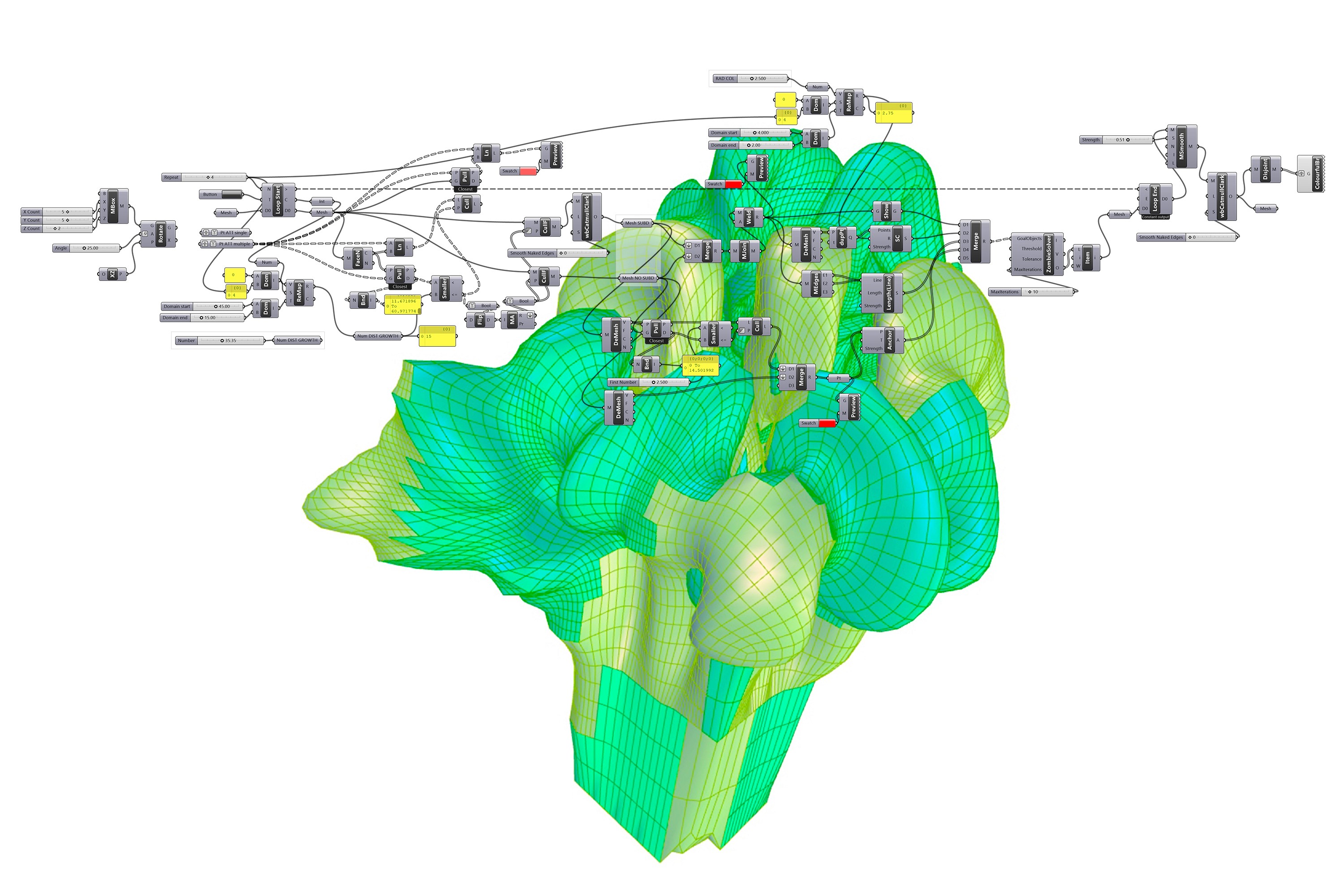

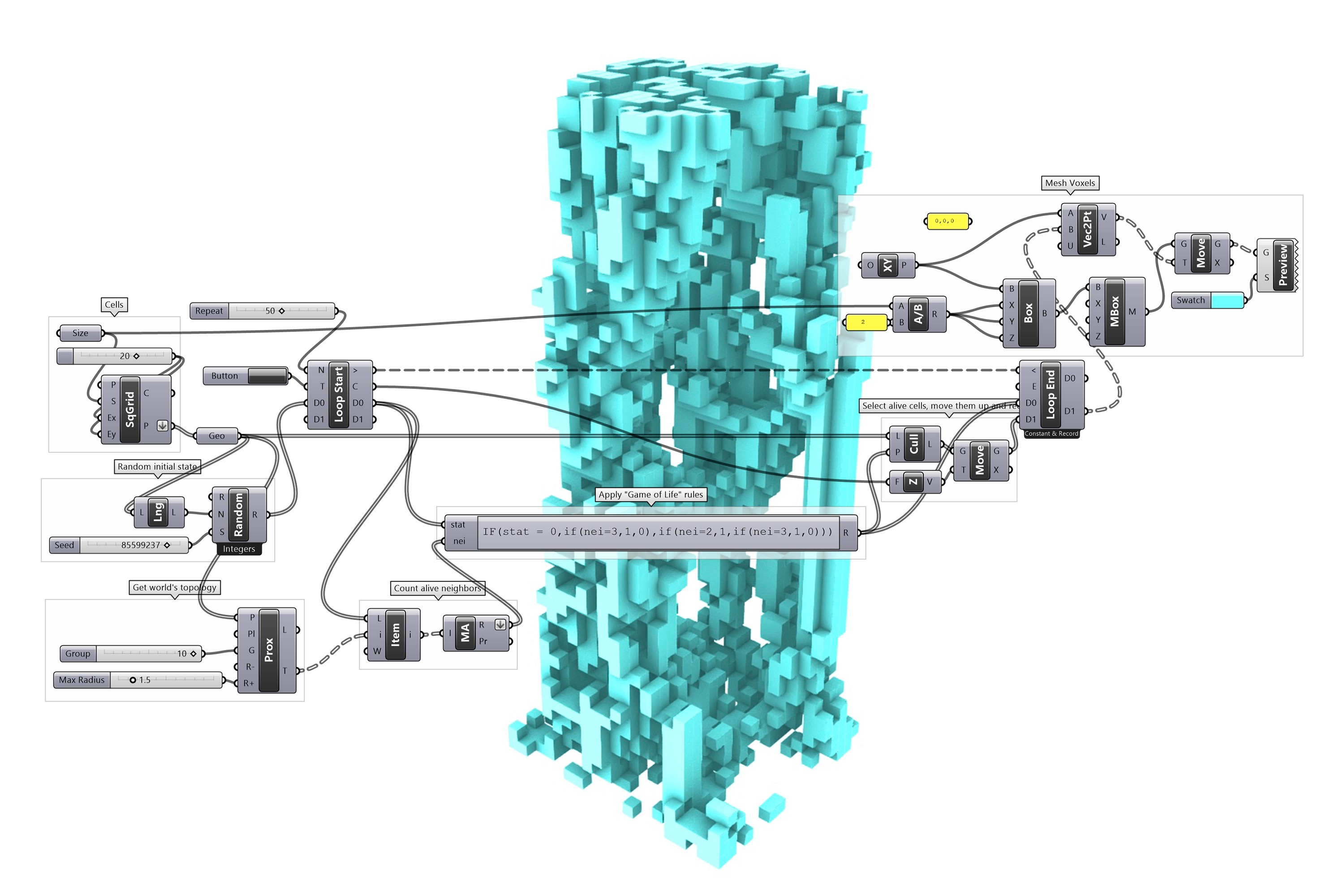

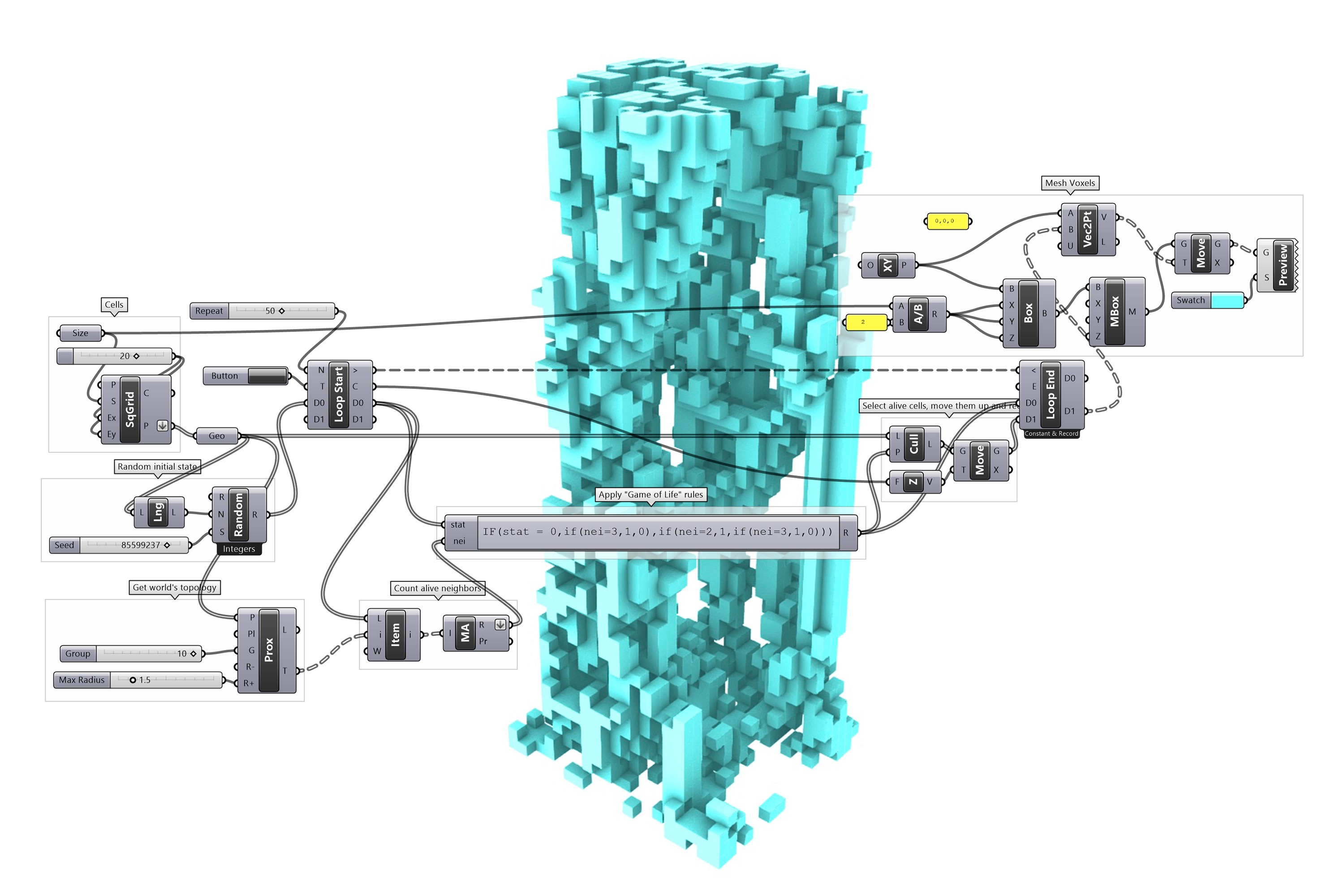

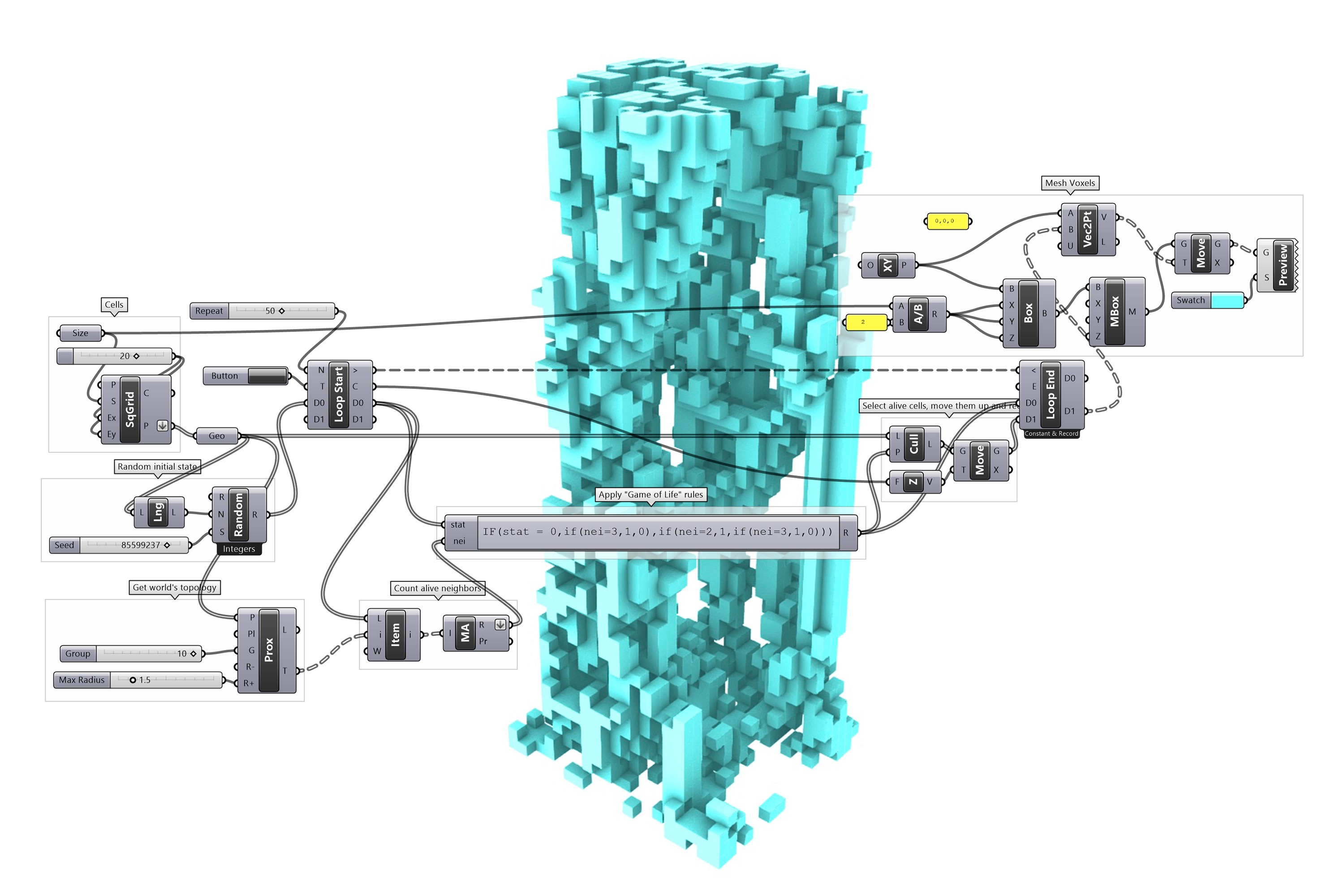

This individual research project uses Grasshopper for 3D form-finding and simulation. The aim is to create complex, intricate 3D models for a range of design projects, which can then be analysed and optimised through simulation so that they meet specific design requirements. Fractality, growth and recursive iteration guide the investigation, since I believe that understanding biological shapes and structures is key to imagining the future of architecture and design.
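As a rough illustration of recursive, stochastic growth, the sketch below builds a branching structure in plain Python. It is not the Grasshopper definition used in the project: the function names `grow` and `build`, the two-children rule, the 0.7 length decay and the perturbation range are all assumptions chosen only to show the idea.

```python
import math
import random


def grow(start, direction, length, depth, rng, segments):
    """Recursively append (start, end) branch segments to `segments`."""
    if depth == 0:
        return
    end = tuple(s + d * length for s, d in zip(start, direction))
    segments.append((start, end))
    for _ in range(2):  # assumption: every branch splits into two children
        # Randomly perturb the parent direction, then renormalise it.
        child = tuple(d + rng.uniform(-0.5, 0.5) for d in direction)
        norm = math.sqrt(sum(c * c for c in child))
        child = tuple(c / norm for c in child)
        # Assumption: each generation is 30% shorter than its parent.
        grow(end, child, length * 0.7, depth - 1, rng, segments)


def build(depth=4, seed=0):
    """Return the list of segments for a tree with `depth` generations."""
    rng = random.Random(seed)  # seeded so a given form can be reproduced
    segments = []
    grow((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1.0, depth, rng, segments)
    return segments


if __name__ == "__main__":
    tree = build(depth=4, seed=42)
    print(len(tree), "segments")
```

Changing the seed gives a different form from the same rule set, and fixing it makes a particular iteration reproducible. In a Grasshopper setting, the resulting segments could be turned into curves or pipes for further modelling.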

Once the 3D models have been defined through iterative stochastic loops, I generate depth fields that are then used in Stable Diffusion, a text-to-image AI model. The ControlNet extension makes it possible to interpret the space, giving much finer control over the output I want to obtain. With this methodology, rendering 3D models is significantly faster and more efficient, which makes it possible to produce high-quality visualizations that accurately represent the design intent.
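The depth-field step can be pictured as converting per-pixel camera distances into a greyscale image. The sketch below is a minimal version under stated assumptions: the helper name `depth_to_gray` is hypothetical, the input is a plain 2D list of distances, and near surfaces are mapped to bright values, which is the convention commonly used for ControlNet depth inputs. The project's actual export settings are not documented here.

```python
def depth_to_gray(depths):
    """Map a 2D grid of camera distances to 0-255 grey values (near = bright)."""
    flat = [d for row in depths for d in row]
    near, far = min(flat), max(flat)
    span = far - near
    if span == 0:
        # A flat depth field carries no relief; return uniform white.
        return [[255 for _ in row] for row in depths]
    # Linear remap: the nearest point becomes 255, the farthest becomes 0.
    return [[round(255 * (far - d) / span) for d in row] for row in depths]


if __name__ == "__main__":
    sample = [[1.0, 2.0], [4.0, 5.0]]  # distances in arbitrary units
    for row in depth_to_gray(sample):
        print(row)
```

The resulting grid would still need to be written out as an image file before it could be passed to a depth-conditioned ControlNet model together with the text prompt.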

Overall, the project combines Grasshopper for 3D form-finding and simulation with Stable Diffusion for quick, accurate rendering. The result is a streamlined workflow that produces striking designs in a fraction of the time that traditional design methods would take.
